Understanding, automating, and assisting choice modellers’ workflows through AI

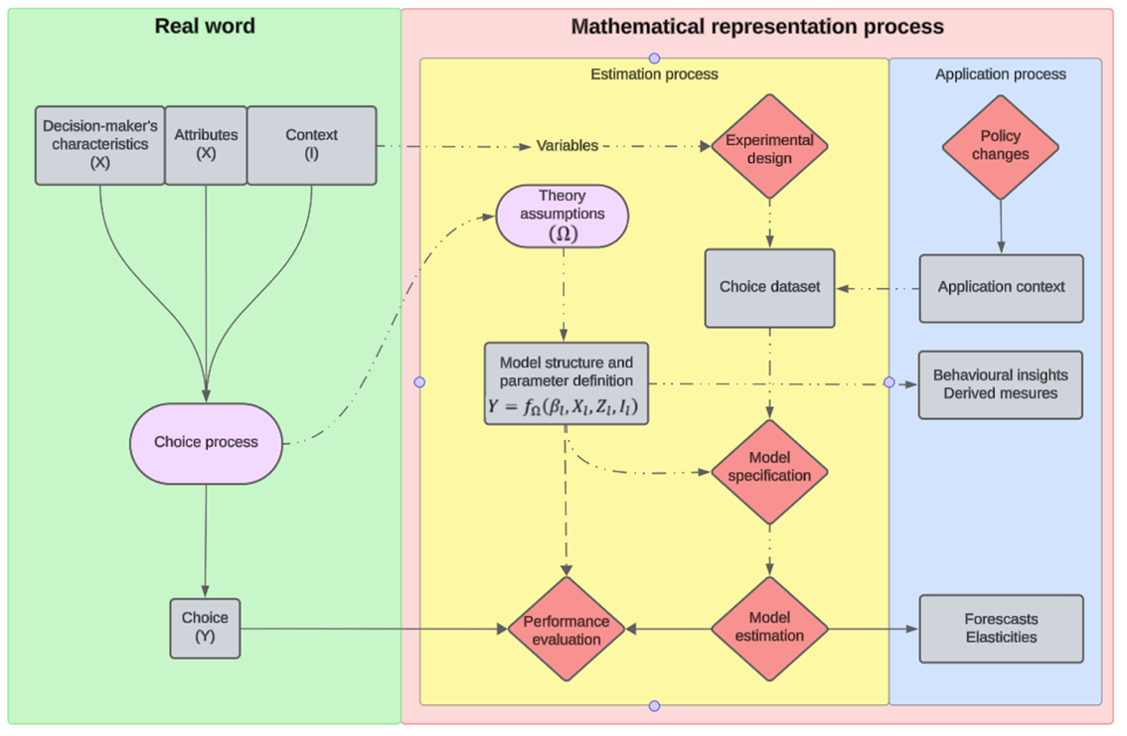

Discrete choice modelling is a robust framework for understanding and forecasting human choice behaviour across various fields. These models are typically used to infer individual preferences, estimate consumers’ willingness to pay, and generate behavioural insights that inform policy decisions. Unlike traditional approaches, this research is often considered an art form. This perspective arises from the need to blend formal behavioural theories, statistical methodologies, and the judgments of choice modellers throughout the iterative journey from data collection through descriptive analysis, model specification, and outcome interpretation to the reporting of results.

Yet, despite their formal structure, choice models remain deeply influenced by *choice modellers' workflows*. Modellers make a wide range of analytical decisions covering data collection, data exploration, model specification, model estimation, interpretation, and reporting. These decisions significantly influence the modelling results. Although this flexibility promotes exploration and efficient methodological progress, it also carries the risk of poor decisions, limits reproducibility, and hampers discussion about good practices in the community.

(3) Delphos: Reinforcement Learning for Choice Model Specification

Our latest project introduces Delphos (paper), a reinforcement learning framework designed to assist analysts in the complex task of specifying discrete choice models. Selecting an appropriate model is often iterative and time-consuming due to the many modelling decisions involved, particularly when exploring nonlinear transformations, capturing observed heterogeneity, and meeting behavioural expectations.

This project formalises an agent-environment interaction to automate the model specification process. This interaction is modelled as a Markov Decision Process, in which a Deep Q-Network agent sequentially takes actions to construct model specifications. The environment estimates these models, evaluates their performance, and provides feedback based on model fit or behavioural expectations, as depicted in the figure below:

This work demonstrates how RL can support analysts by exploring broad specification spaces, proposing well-performing models, and reducing repetitive manual effort.
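The agent-environment loop described above can be illustrated with a minimal sketch. It is not the Delphos implementation: it swaps the Deep Q-Network for tabular Q-learning so that it runs without dependencies, and it replaces model estimation with a made-up scoring function. The action names, the `TERM_GAIN` values, and the parsimony penalty are all hypothetical assumptions chosen for illustration.

```python
import random

# Hypothetical action set: each action adds one term to the utility
# specification, or stops the episode. Names are illustrative only.
ACTIONS = ["add_cost", "add_time", "add_log_time",
           "add_income_interaction", "stop"]

# Toy stand-in for the environment's estimation step. A real environment
# would estimate the candidate model on data and report its fit; these
# numbers are assumptions so the example runs without a dataset.
TERM_GAIN = {"add_cost": 3.0, "add_time": 2.0,
             "add_log_time": 0.5, "add_income_interaction": 1.0}
PENALTY_PER_TERM = 0.8  # crude parsimony penalty (assumption)


def score(spec):
    """Illustrative 'fit' of a specification (a set of term names)."""
    return sum(TERM_GAIN[t] for t in spec) - PENALTY_PER_TERM * len(spec)


def step(spec, action):
    """Environment transition: returns (next_spec, reward, done)."""
    if action == "stop" or action in spec:
        return spec, 0.0, True  # stopping or repeating a term ends the episode
    new_spec = spec | {action}
    return new_spec, score(new_spec) - score(spec), False


def train(episodes=5000, alpha=0.1, gamma=1.0, epsilon=0.2, seed=0):
    """Tabular Q-learning over specifications (states are frozensets)."""
    rng = random.Random(seed)
    q = {}
    for _ in range(episodes):
        spec, done = frozenset(), False
        while not done:
            if rng.random() < epsilon:  # explore
                action = rng.choice(ACTIONS)
            else:  # exploit the current value estimates
                action = max(ACTIONS, key=lambda a: q.get((spec, a), 0.0))
            nxt, reward, done = step(spec, action)
            best_next = 0.0 if done else max(
                q.get((nxt, a), 0.0) for a in ACTIONS)
            old = q.get((spec, action), 0.0)
            q[(spec, action)] = old + alpha * (reward + gamma * best_next - old)
            spec = nxt
    return q


def greedy_spec(q):
    """Follow the learned greedy policy from the empty specification."""
    spec, done = frozenset(), False
    while not done:
        action = max(ACTIONS, key=lambda a: q.get((spec, a), 0.0))
        spec, _, done = step(spec, action)
    return spec
```

In this toy setting, `greedy_spec(train())` is expected to return the three terms whose illustrative gain exceeds the penalty. With real data, the reward would instead come from estimating each candidate model, and the state and value function would need a richer representation, which is where a Deep Q-Network becomes relevant.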

(2) Large Language Models as Modelling Assistants

This project investigates how Large Language Models (LLMs) can assist or collaborate with analysts when formulating discrete choice models. Since LLMs are increasingly capable of generating domain-relevant knowledge, we explore the ability of LLMs not only to propose model specifications but also to code and estimate them.

This work then examines various prompting strategies, model consistency, and the strengths and limitations of current LLMs for supporting choice modelling workflows. This research positions LLM-based assistants as emerging tools that can accelerate exploratory analysis, support model refinement, and complement human expertise in behavioural modelling.

You can find our latest work at:

https://arxiv.org/pdf/2507.21790

(1) Understanding Modellers: The Serious Choice Modelling Game

Our foundational project introduces the Serious Choice Modelling Game, which mimics the actual modelling process. This allows us to capture and analyse the decision-making processes of modellers during the descriptive data analysis, model specification, outcome interpretation, and reporting phases. Participants were asked to develop choice models using a stated preference dataset to inform policymakers about individual willingness-to-pay values.

This setup allows us to observe how modellers behave in practice—how they explore data, specify choice models, and interpret outcomes. The results reveal substantial variation in descriptive approaches, modelling strategies, and willingness-to-pay values. These findings provide an empirical basis for understanding modelling behaviour and highlight the need for tools that support transparency, reproducibility, and methodological learning.
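As background on how willingness-to-pay values are commonly derived from an estimated choice model, the relation below is the standard textbook case. It assumes a utility that is linear in cost and in the attribute of interest (travel time is used here as an example). The symbols are illustrative and do not refer to the specifications that participants in the game actually used.

```latex
\[
V_{j} = \beta_{\text{cost}}\,\text{cost}_{j} + \beta_{\text{time}}\,\text{time}_{j} + \dots,
\qquad
\text{WTP}_{\text{time}}
  = \frac{\partial V_{j} / \partial\,\text{time}_{j}}{\partial V_{j} / \partial\,\text{cost}_{j}}
  = \frac{\beta_{\text{time}}}{\beta_{\text{cost}}}
\]
```

With both coefficients negative, the ratio is positive and is read as the amount a respondent would pay to save one unit of time. Because the value is a ratio of estimated coefficients, different specification choices (for example, nonlinear transformations or interactions) can yield different willingness-to-pay values from the same dataset.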

To facilitate future research, the source code and installation instructions for the Serious Choice Modelling Game are publicly available on GitHub. The repository can be accessed at https://github.com/TUD-CityAI-Lab/DCM-SG.

Conceptual overview of the choice modelling research process

MSc theses

- Exploring the Enhancement of Predictive Accuracy for Minority Classes in Travel Mode Choice Models

  Author: Aspasia Panagiotidou (TU Delft)

- Analysis of the Contribution of Explanatory Factors on the Predicted Choice Probabilities Derived from the L-MNL Using the SHAP Method: A Mode Choice Application

  Author: Exequiel Salazar (Universidad de Concepción)

Current MSc Projects

We welcome motivated MSc students interested in contributing to innovative research at the intersection of behavioural modelling, machine learning, and human–AI collaboration. Below are some examples of ongoing or proposed topics:

- Reinforcement learning for discrete choice modelling

  Develop and evaluate RL agents that explore specification spaces, incorporate expert knowledge or behavioural constraints, and extend Delphos to models with unobserved heterogeneity (e.g., latent classes, mixed logit). Opportunities also exist for creating new RL environments or improving the agent’s state representations.

- Immersion in gaming environments

  Analyse physiological signals collected while participants play a horror video game on traditional monitors or in virtual reality. Potential topics include identification of visual and audio factors that trigger responses, sequential modelling of physiological reactions, or designing predictive stress-response models.

Gabriel Nova

PhD Candidate

G.Nova@tudelft.nl